The biggest lesson I’m taking away is that building software isn’t only about making something work; it’s also about making it reliable and measurable. When I started the program, I focused on features and implementation. By the end, I was thinking more like an evaluator and treating evidence as part of the product.

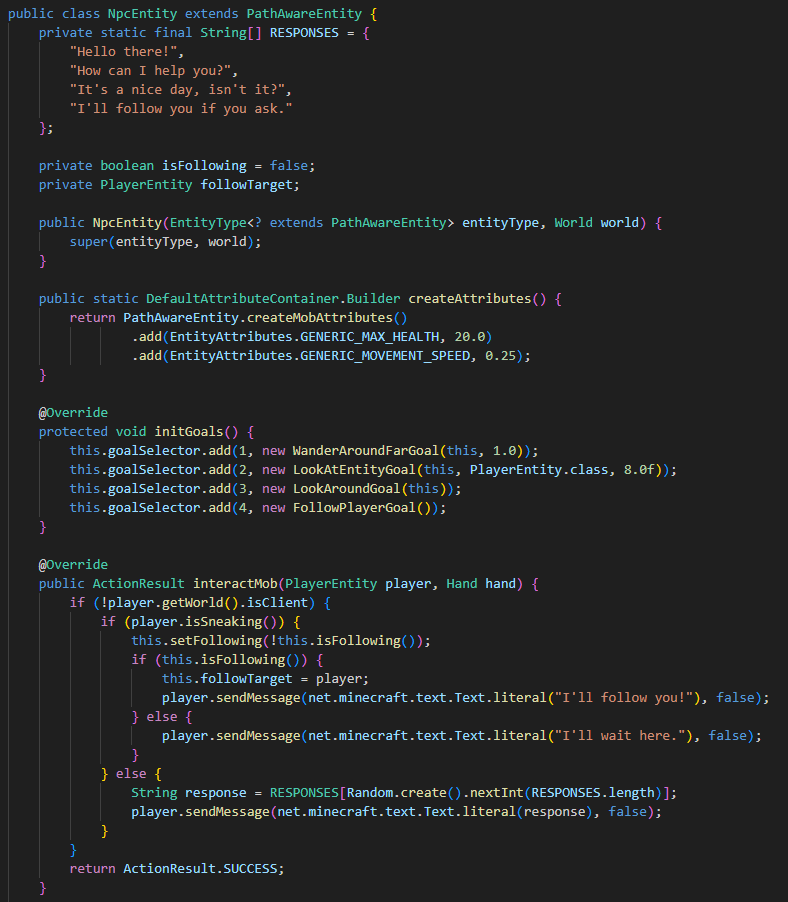

My capstone project became the clearest representation of this change. I built a context-aware NPC agent for Minecraft that combines retrieval-augmented generation with environmental perception, persistent memory, and constrained behavior execution. The system connects a Mineflayer agent to a Python backend, and I designed it so the agent could act safely in the world while generating telemetry and survey data I could use to support my conclusions.

As I reviewed my earlier coursework and compared it to my final capstone, I noticed that the program changed how I approach both architecture and evaluation. I became more intentional about separating systems into modules and logging the right signals so I could interpret outcomes conservatively. I also learned how much evaluation depends on task design. Some tasks were too static to show meaningful differences, while failure-prone behaviors revealed the value of memory and retrieval more clearly.

Each course contributed to the final result by strengthening either my engineering foundation or my ability to evaluate what I built. Some classes helped with core software design and implementation, while others pushed me to think more critically about measurement.

Going forward, I plan to keep improving the project as a portfolio piece and to expand its evaluation. My next steps include improving performance, testing with more complex tasks where memory matters more, and scaling up the evaluation to produce stronger evidence. Overall, I’m leaving the program with a clearer understanding that building and evaluating software are intertwined when the goal is work that holds up under scrutiny.